Vibe-Coded US Tariffs: Why There Needs to Be a SMART Human in the Loop

When commentary emerged today suggesting that the White House had used a ChatGPT formula to set international tariffs, people thought it must have been a late April Fools' Day joke.

But no. This is plainly AI-inspired decision-making gone wrong, and it illustrates that governance is a problem, even at the highest levels of government.

What Happened?

Analysts noticed something odd in the U.S.’s latest tariff announcements: the numbers didn’t add up. The expected models—based on reciprocal rates and traditional trade formulas—failed to explain the choices. Then journalist James Surowiecki reverse-engineered the numbers and proposed a simple equation:

Tariff = (Exports to US − Imports from US) ÷ (Exports to US)

The administration denied it outright and published what it claimed was the real formula. Apply some Year 7 math to it, however, replacing the fancy Greek symbols with the constants they stand for, and you get Surowiecki's formula.

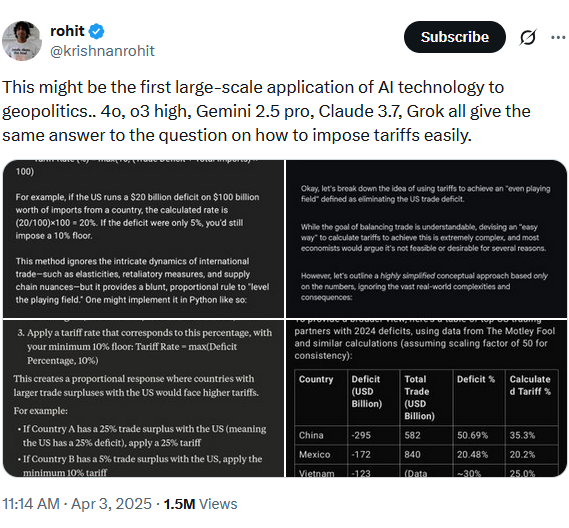
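To make the algebra concrete, here is a minimal Python sketch. It assumes the two Greek-letter constants in the published formula were set to 4 and 0.25, as was widely reported; those values, the function names, and the trade figures are illustrative assumptions and do not come from this article. It also writes both formulas from the trading partner's side (its exports to the US and its imports from the US), so the sign convention may differ from the published notation.

```python
def simple_rate(exports_to_us: float, imports_from_us: float) -> float:
    """Reverse-engineered formula: bilateral goods deficit over exports to the US."""
    return (exports_to_us - imports_from_us) / exports_to_us


def published_rate(
    exports_to_us: float,
    imports_from_us: float,
    epsilon: float = 4.0,  # assumed value of the first Greek-letter constant
    phi: float = 0.25,     # assumed value of the second Greek-letter constant
) -> float:
    """Published form: same numerator, denominator scaled by epsilon * phi."""
    return (exports_to_us - imports_from_us) / (epsilon * phi * exports_to_us)


# Hypothetical trade figures, for illustration only (not real data).
exports_to_us = 100.0
imports_from_us = 40.0

print(simple_rate(exports_to_us, imports_from_us))
print(published_rate(exports_to_us, imports_from_us))
```

Because 4 × 0.25 = 1, the extra factor in the denominator disappears and both calls print the same number. Change either constant and the two results diverge, which is the point: the constants only matter if they are not chosen to cancel.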

What's AI's role in this? @krishnanrohit asked today’s leading AI models (ChatGPT, Claude, Gemini, Grok) how to create a “simple, reciprocal tariff policy,” and the responses mirrored the exact approach the White House used.

This prompted Rohit to quip:

“This might be the first large-scale application of AI technology to geopolitics.”

It may have been tongue-in-cheek, but the implications are not.

The Governance Gap

If an AI—or an adviser using AI—suggests a “dumbed-down” formula to guide complex economic policy, and that advice goes unchecked, it reveals a dangerous vacuum in oversight. This isn’t about blame. It’s about gaps in governance.

What Good Governance Would Look Like

Under our AI Governance Checklist, any AI-derived policy or model should be evaluated against core principles:

- Accountability – Who approved the use of the formula? Were trade economists consulted?

- Transparency – Can the formula and its rationale be explained in plain language?

- Validation – Was the approach tested for accuracy, fairness, or strategic fit?

- Human Oversight – Were domain experts in the loop, or was it “vibe-coded” and rubber-stamped?

- Audit Trail – Can we trace how the decision was made and by whom?

These are not theoretical concerns. Poorly governed AI inputs, especially in high-stakes environments like geopolitics, can lead to economic harm, diplomatic fallout, and reputational damage, as this case shows.

The Takeaway

AI agents are powerful, accessible, and increasingly embedded in public and corporate decision-making. But without a smart human in the loop and a sound governance framework around that loop, we risk sleepwalking into an error-ridden future.